Computational Models of Behavior, part 1

Image credit: Gerd Altmann from Pixabay

Image credit: Gerd Altmann from PixabayIntroduction

It is pretty clear that neuroscience is shaping the artificial intelligence (AI) field by means of new research questions and ideas. But at the same time AI also has a huge impact on the neuroscience field. Over the last decades reinforcement learning (RL) algorithms have been particularly useful in attempts to understand how humans and animals make decisions and they’ve provided methods to quantify the parameters of behavioral data, which could be also correlated to neuronal data. For example, temporal difference (TD) learning model has highlighted the role of dopamine in decision-making in a classical study of Schultz (1998)1.

Even though RL methods have been applied in a neuroscience field for quite some time, some may still be unsure how these methods can help with answering research questions or how to actually apply modeling in practice. While there are already several comprehensive articles on how to apply RL models to decision-making data, the purpose of this post is to provide an actual example of model fitting with coding guidelines and also to compare two main approaches, maximum likelihood estimation (MLE) and Bayesian method. Part 1 will focus on the MLE approach with the example code in Python, whereas part 2 will focus on the Bayesian approach written in R with the help of hBayesDM package. Both parts should be viewed as an overview, and not as means of selecting the one-size-fits-all approach. The explanations and basics of theory will be provided, however for a more in-depth overview reader might want to refer to Lockwood et al. (2021)2, Daw (2011)3 and Wilson et al. (2019)4.

Objectives:

- How to answer research questions using modelling.

- How to fit a RL model to behavioral data using MLE.

- How to compare models and choose the best fitting one.

- How to generate the data using RL model.

Code

1import itertools

2import numpy as np

3import pandas as pd

4import seaborn as sns

5import matplotlib.pyplot as plt

6import pingouin as pg

7from tqdm import tqdm

8from scipy.special import softmax, log_softmax

9from scipy.optimize import minimize

10from scipy.stats import chisquare

11

12

13plt.style.use('bmh')

14plt.rcParams['figure.facecolor'] = 'white'

Research question:

We hypothesize that treatment (could be anything, dopamine manipulations, exposure to stress, etc.) affects humans’ reward sensitivity and the learning rate for a positive outcome (the speed of learning where the optimal choice is).

Paradigm:

We could test this using so-called multi-armed bandit (MAB) paradigm, with a slight modification. In our case, each “arm” can bring reward and punishment at the same time for each trial. The probabilities of outcomes are stationary over time. Subject has to make a decision (choose an “arm”) at each trial. We will use a 4-armed bandit.

We will also have two groups, control (CTR) and treatment (TRT), and compare the parameters between them.

Data generation

For this article, we will generate artificial data, but we will treat it as real-life data (at least most of the time). In other words, we will generate data with a set of parameter values, but then we will try to recover them using the modeling. On the one hand, this will allow to perform a quantitative assessment of model fitting techniques (since we know the ground truth). On the other hand, we can see how to perform a model fitting in a “real-life”.

We will use the model adapted from Seymour et al. (2012)5. Parameters of the model are:

- learning rate for reward outcomes (how fast agent learns from the positive feedback)

- learning rate for punishment outcomes (how fast agent learns from the negative feedback)

- reward sensitivity (how agent perceives positive feedback)

- punishment sensitivity (how agent perceives negative feedback)

Model algorithm:

- for each step in episode do

- Select action using softmax policy

- Observe reward and punishment values

- for each action in possible actions do

- if == do

- else

- end

- if == do

- end

- end

Code

1def generate_agent_data(

2 alpha_rew, alpha_pun, rew_sens,

3 pun_sens, rew_prob, pun_prob, rew_vals,

4 n_arms=4, n_trials=100):

5 """

6 Simulate agent data for N-armed bandit task.

7

8 Arguments

9 ----------

10 alpha_rew : float

11 Learning rate for rewarding trials. Should be in a (0, 1) range.

12

13 alpha_pun : float

14 Learning rate for punishing trials. Should be in a (0, 1) range.

15

16 rew_sens : float

17 Reward sensitivity (R)

18

19 pun_sens : float

20 Punishment sensitivity (P)

21

22 rew_prob : array_like

23 Probability of reward for each arm.

24

25 pun_prob : array_like

26 Probability of punishment for each arm.

27

28 rew_vals : array_like

29 Values of reward (first element) and punishment (second element).

30 For example [100, -50] means that agent receives 100 a.u. during

31 the reward and looses 50 a.u. during the punishment.

32

33 n_arms : int, default=4

34 Number of arms in N-armed bandit task.

35

36 n_trials : int, default=100

37 Number of simulated trials for an agent

38

39 Returns

40 ----------

41 actions : array of shape (n_trials,)

42 Selected action by the agent at each trial.

43

44 gains : array of shape (n_trials,)

45 Agent's reward value at each trial.

46

47 losses : array of shape (n_trials,)

48 Agent's punishment value at each trial.

49

50 Qs : array of shape (n_trials, n_arms)

51 Value function for each arm at each trial.

52 """

53

54 actions = np.zeros(shape=(n_trials,), dtype=np.int32)

55 gains = np.zeros(shape=(n_trials,), dtype=np.int32)

56 losses = np.zeros(shape=(n_trials,), dtype=np.int32)

57 Qs = np.zeros(shape=(n_trials, n_arms))

58

59 # initial uniform values for both reward and punishment value function

60 Q_rew = np.ones(shape=(n_arms,))

61 Q_rew /= n_arms

62

63 Q_pun = np.ones(shape=(n_arms,))

64 Q_pun /= n_arms

65

66 Qs[0] = Q_rew + Q_pun

67

68 for i in range(n_trials):

69

70 # choose the action based of softmax function

71 prob_a = softmax(Qs[i])

72 a = np.random.choice(a=range(n_arms), p=prob_a) # select the action

73 # list of actions that were not selected

74 a_left = list(range(n_arms))

75 a_left.remove(a)

76

77 # reward

78 if np.random.rand() < rew_prob[a]: # if arm brings reward

79 r = rew_vals[0]

80 else:

81 r = 0

82

83 gains[i] = r

84 # value function update for a chosen arm

85 Q_rew[a] += alpha_rew * (rew_sens*r - Q_rew[a])

86 # value function update for non-chosen arms

87 for a_l in a_left:

88 Q_rew[a_l] += alpha_rew*(-Q_rew[a_l])

89

90 # punishment

91 if np.random.rand() < pun_prob[a]: # if arm brings punishment

92 r = rew_vals[1]

93 else:

94 r = 0

95

96 losses[i] = r

97 Q_pun[a] += alpha_pun * (pun_sens*r - Q_pun[a])

98 for a_l in a_left:

99 Q_pun[a_l] += alpha_pun*(-Q_pun[a_l])

100

101 # save the records

102 actions[i] = a

103

104 if i < n_trials-1:

105 Qs[i+1] = Q_rew + Q_pun

106

107 return actions, gains, losses, Qs

Initial parameters:

- Probabilities of reward for each option are: 10%, 30%, 60% and 90%.

- Probabilities of punishment for each option are: 90%, 60%, 30% and 10%.

- Value of the reward is , value of the punishment is .

- Each group (CTR and TRT) will consist of 10 subjects.

- Each subject will perform 200 trials.

We can see, that an “ideal” agent would learn that 4-th option is the best choice to maximize the total reward, since it has the highest probability of reward and lowest probability of punishment.

Code

1# initial parameters

2rew_probs = np.array([.1, .3, .6, .9]) # probability of reward of each arm

3pun_probs = np.array([.9, .6, .3, .1]) # probability of punishment of each arm

4rew_vals = np.array([1, -1]) # reward = 1, punishment = -1

5n_subj = 10 # n subjects in each group

6n_arms = 4

7n_trials = 200

8

9# ids of agents

10ids = {'Control': list(range(1, 11)),

11 'Treatment': list(range(11, 21))}

12

13params = ['alpha_rew', 'alpha_pun', 'R', 'P']

14param_names = {

15 'alpha_rew': 'Learning rate (reward)',

16 'alpha_pun': 'Learning rate (punishment)',

17 'R': 'Reward sensitivity',

18 'P': 'Punishment sensitivity'}

1# data generation

2np.random.seed(1)

3agent_data = pd.DataFrame()

4true_params = {}

5

6for subj in itertools.chain(*ids.values()):

7

8 true_params[subj] = {}

9

10 if subj in ids['Control']:

11 # control group

12 group = 'Control'

13 alpha_rew = np.random.randint(low=30, high=50) / 100 # RV in [0.3, 0.5]

14 alpha_pun = np.random.randint(low=20, high=40) / 100 # RV in [0.2, 0.4]

15 rew_sens = np.random.randint(low=20, high=50) / 10 # RV in [2., 5.]

16 pun_sens = np.random.randint(low=30, high=70) / 10 # RV in [3., 7.]

17 elif subj in ids['Treatment']:

18 # treatment group

19 group = 'Treatment'

20 alpha_rew = np.random.randint(low=10, high=25) / 100 # RV in [0.1, 0.25]

21 alpha_pun = np.random.randint(low=20, high=40) / 100 # RV in [0.2, 0.4]

22 rew_sens = np.random.randint(low=15, high=50) / 10 # RV in [1., 5.]

23 pun_sens = np.random.randint(low=30, high=70) / 10 # RV in [3., 7.]

24 else:

25 raise ValueError(f'No subject with id {subj} found!')

26

27 actions, gain, loss, Qs = generate_agent_data(

28 alpha_rew=alpha_rew,

29 alpha_pun=alpha_pun,

30 rew_sens=rew_sens,

31 pun_sens=pun_sens,

32 rew_prob=rew_probs,

33 pun_prob=pun_probs,

34 rew_vals=rew_vals,

35 n_trials=n_trials)

36

37 # add generated data to temporary data frame

38 temp_df = pd.DataFrame({

39 'group': group, 'subjID': subj, 'trial': range(1, n_trials+1),

40 'choice': actions+1, 'gain': gain, 'loss': loss})

41

42 agent_data = agent_data.append(temp_df)

43

44 # update dictionary with true parameter values

45 true_params[subj]['alpha_rew'] = alpha_rew

46 true_params[subj]['alpha_pun'] = alpha_pun

47 true_params[subj]['R'] = rew_sens

48 true_params[subj]['P'] = pun_sens

Sample of generated data:

| group | subjID | trial | choice | gain | loss | |

|---|---|---|---|---|---|---|

| 0 | Control | 1 | 1 | 1 | 0 | -1 |

| 1 | Control | 1 | 2 | 1 | 0 | -1 |

| 2 | Control | 1 | 3 | 3 | 1 | 0 |

| 3 | Control | 1 | 4 | 3 | 1 | 0 |

| 4 | Control | 1 | 5 | 1 | 0 | -1 |

| 5 | Control | 1 | 6 | 3 | 1 | -1 |

| 6 | Control | 1 | 7 | 4 | 0 | 0 |

| 7 | Control | 1 | 8 | 3 | 0 | 0 |

| 8 | Control | 1 | 9 | 1 | 1 | -1 |

| 9 | Control | 1 | 10 | 4 | 1 | 0 |

Even though this data is generated, that’s the exact data you can get from a real behavioral experiment. Each row in a dataset represents a trial for a particular subject. choice column represents the option chosen, gain and loss represent the reward and punishment values for a given trial. Full generated data set can be found here.

Code

1true_params_df = pd.DataFrame(true_params).T.reset_index(drop=False)

2true_params_df.rename(columns={'index': 'subjID'}, inplace=True)

3true_params_df['group'] = true_params_df['subjID'].apply(

4 lambda x: 'Control' if x in ids['Control'] else (

5 'Treatment' if x in ids['Treatment'] else 'Undefined'

6 )

7)

1# plot boxplots with true parameter values for each group

2plt.figure(figsize=(11,6))

3for (i, param) in enumerate(params):

4 plt.subplot(2,2,i+1)

5 sns.boxplot(

6 y=param, x='group',

7 data=true_params_df, linewidth=3,

8 palette='Set3')

9 sns.stripplot(

10 y=param, x='group', data=true_params_df,

11 jitter=0.1, linewidth=2, palette='Set3')

12 if i < 2:

13 plt.xlabel('')

14 plt.ylim([0, .55]) # use the same scale for each parameter type

15 else:

16 plt.xlabel('Group', fontweight='bold')

17 plt.ylim([1, 8])

18

19 plt.ylabel('')

20 plt.title(param_names[param], fontweight='bold')

21

22plt.suptitle('True parameters of agents', fontsize=15, fontweight='bold')

23plt.tight_layout()

24plt.show()

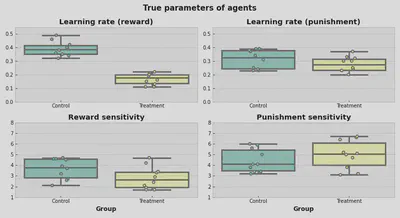

That’s how true parameters are distributed. Visually, it looks like TRT group on average has a lower learning rate for positive feedback compared to CTR.

Maximum Likelihood Estimation

Now we want to recover the parameters of subjects and basically check if our hypothesis is true and treatment affects the learning rate for positive feedback and sensitivity to a reward. As told before, there are two main approaches to do this, fitting the model using MLE and Bayesian way. Here we will focus on the MLE.

The idea of MLE is pretty simple, however, math under the hood is a bit more sophisticated. We want to find such set of parameters , that is more likely to be true. In a simplified version, one can start it by choosing 4 random values for , putting them into the algorithm described in data generation part, and then answering the question “What is the probability that actions were selected given these four parameters” (or in a notation way, ). After this, one would have to change the values and answer the same question again for a new set of values. After of such runs, the winning set of parameters is the one that gives the highest probability of the data occurrence. Or in other words, it is the maximum likelihood estimator.

But of course, no one would do that by hand and we can use function optimization techniques to deal with it. In Python we can use minimize function from SciPy package to find the estimated values with the help of limited-memory Broyden–Fletcher–Goldfarb–Shanno algorithm. This algorithm is a variation of a gradient descent optimization method.

Since the function is looking for a minimum value, we will change the sign of likelihood in our function (and now function minimize will find the minimum value, while it is actually the highest). Also, we are going to use the logarithm of likelihood, not the likelihood value itself.

Code

1def log_lik(x, *args):

2 """

3 Sum of negative log likelihoods over all trials.

4

5 Arguments

6 ----------

7 x : tuple, list

8 Parameters to estimate by MLE fit.

9

10 args: tuple, list

11 Input parameters for the model.

12

13 Returns

14 ----------

15 Sum of negative log likelihoods.

16 """

17

18 alpha_rew, alpha_pun, rew_sens, pun_sens = x # parameters to estimate

19 n_arms, actions, gain, loss = args # input values

20

21 Q_rew = np.ones(shape=(n_arms,))

22 Q_rew /= n_arms

23

24 Q_pun = np.ones(shape=(n_arms,))

25 Q_pun /= n_arms

26

27 log_prob_actions = np.zeros(shape=(len(actions), n_arms))

28

29 for i, (a, g, l) in enumerate(zip(actions, gain, loss)):

30

31 a_left = list(range(n_arms)) # actions that were not selected

32 a_left.remove(a)

33

34 log_prob_action = log_softmax(Q_rew + Q_pun)

35 log_prob_actions[i] = log_prob_action[a]

36

37 Q_rew[a] += alpha_rew * (rew_sens*g - Q_rew[a])

38 for a_l in a_left:

39 Q_rew[a_l] += alpha_rew*(-Q_rew[a_l])

40

41 Q_pun[a] += alpha_pun * (pun_sens*l - Q_pun[a])

42 for a_l in a_left:

43 Q_pun[a_l] += alpha_pun*(-Q_pun[a_l])

44

45 return -np.sum(log_prob_actions)

46

47

48def mle_fit(x0, fun, params, bounds):

49 """

50 Fit model parameters using MLE approach.

51

52 Arguments

53 ----------

54 x0 : array-like

55 Initial value for each of the parameter.

56

57 fun : function

58 Function name to use for optimization.

59

60 params : list

61 List of parameter names.

62

63 bounds : tuple

64 Tuple of the lower and upper bounds for parameters.

65

66 Returns

67 ----------

68 output : dict

69 Dictionary with the estimated parameters for each agent.

70

71 Example call

72 ----------

73 mle_fit(

74 x0=[0.5, 2], fun=logLike,

75 params=['alpha', 'beta'],

76 bounds=((0.1, 1), (0, 10))

77 """

78

79 output = {}

80

81 for subj in tqdm(agent_data['subjID'].unique(), desc=f'Fit with x0: {x0}'):

82

83 output[subj] = {}

84 cond = agent_data['subjID'] == subj

85 actions = (agent_data[cond]['choice'] - 1).to_list()

86 gain = agent_data[cond]['gain'].to_list()

87 loss = agent_data[cond]['loss'].to_list()

88

89 result = minimize(

90 fun=fun, x0=x0,

91 method='L-BFGS-B', # Limited-memory Broyden–Fletcher–Goldfarb–Shanno algorithm

92 args=(n_arms, actions, gain, loss),

93 bounds=bounds)

94

95 for (j,param) in enumerate(params):

96 output[subj][param] = result.x[j]

97 output[subj]['likelihood'] = result.fun

98

99 return output

100

101

102def best_params(all_params_list):

103 """

104 Find the best fitting parameters from a set of parameters.

105

106 Arguments

107 ----------

108 all_params_list : list

109 List with the dictionaries of fitted value.

110 Each dictionary should look as following:

111 {<subject>: {<parameter1>: <value>, ..., <likelihood>: <value>}}

112

113 Returns

114 ----------

115 selected_params : dict

116 Dictionary with the best fitted values.

117 """

118

119 # dictionary {<subject>: [<likelihood1>, <likelihood2>, ...]}

120 likelihoods = {}

121 for subj in itertools.chain(*ids.values()):

122 likelihoods[subj] = []

123 for param_set in all_params_list:

124 likelihoods[subj].append(param_set[subj]['likelihood'])

125

126 # select the lowest log likelihood among given models

127 # {<subject>: <index of a set with the lowest log likelihood>}

128 selected_set = {subj: np.argmin(lik) for (subj, lik) in likelihoods.items()}

129

130 # new set of parameters for each subject with best fitted values

131 selected_params = {}

132 for subj in selected_set.keys():

133 selected_params[subj] = all_params_list[selected_set[subj]][subj]

134

135 return selected_params

Different starting values

Before we start fitting, there is one big issue with MLE that we should be aware of. The algorithm can be stuck in a local minimum, meaning that the found set of parameters is not optimal in the entire parameter space. One of the ways to deal with this problem is to use several starting points. Here we will run MLE for 10 random starting values and choose the set that gives the minimum negative log likelihood for each subject.

We will also use bounds for the parameters (learning rates should be in a range \[ 0, 1\] and sensitivities should be in a range \[ 0, 8 \]), however Daw (2009)3 argues against that.

Code

1all_mle_params = []

2

3for i in range(10):

4 # generate random initial values

5 np.random.seed(i)

6 alpha0 = list(np.random.random(size=(2,)).round(2))

7 sens0 = list(np.random.randint(low=0, high=80, size=(2,)) / 10)

8 x0 = alpha0 + sens0

9

10 mle_output = mle_fit(

11 x0=x0, fun=log_lik,

12 params=params,

13 bounds=((0, 1), (0, 1), (0, 8), (0, 8)))

14

15 all_mle_params.append(mle_output)

16

17selected_params = best_params(all_mle_params)

Fit with x0: [0.55, 0.72, 6.7, 6.7]: 100%|██████████| 20/20 [00:38<00:00, 1.91s/it]

Fit with x0: [0.42, 0.72, 0.9, 7.5]: 100%|██████████| 20/20 [00:36<00:00, 1.81s/it]

Fit with x0: [0.44, 0.03, 2.2, 4.3]: 100%|██████████| 20/20 [00:35<00:00, 1.78s/it]

Fit with x0: [0.55, 0.71, 5.6, 7.2]: 100%|██████████| 20/20 [00:27<00:00, 1.38s/it]

Fit with x0: [0.97, 0.55, 0.1, 7.2]: 100%|██████████| 20/20 [00:45<00:00, 2.25s/it]

Fit with x0: [0.22, 0.87, 1.6, 7.3]: 100%|██████████| 20/20 [00:34<00:00, 1.74s/it]

Fit with x0: [0.89, 0.33, 7.9, 6.2]: 100%|██████████| 20/20 [00:39<00:00, 1.98s/it]

Fit with x0: [0.08, 0.78, 6.7, 2.3]: 100%|██████████| 20/20 [00:34<00:00, 1.75s/it]

Fit with x0: [0.87, 0.97, 0.5, 0.8]: 100%|██████████| 20/20 [00:38<00:00, 1.91s/it]

Fit with x0: [0.01, 0.5, 5.4, 5.6]: 100%|██████████| 20/20 [00:41<00:00, 2.09s/it]

Once we have selected the best fitting values, the following questions may arise:

- Are we sure that the selected values represent the global minimum? We are not sure.

- Were 10 initial starting points enough? We don’t know.

- Would another optimization method find a better set of parameters? It could, but the overview of different optimization methods is beyond the scope of this article.

It may sound vague, but that’s the sad truth about MLE, we are never sure that we’ve found the best parameters. Although, by trying different methods and initial values we can make it as good as possible.

Code

1# convert dict to dataframe

2mle_params_df = pd.DataFrame(selected_params).T.reset_index(drop=False)

3mle_params_df.rename(columns={'index': 'subjID'}, inplace=True)

4mle_params_df['group'] = mle_params_df['subjID'].apply(

5 lambda x: 'Control' if x in ids['Control'] else (

6 'Treatment' if x in ids['Treatment'] else 'Undefined'

7 )

8)

1# plot estimated parameters

2plt.figure(figsize=(11,6))

3for (i, param) in enumerate(params):

4 plt.subplot(2,2,i+1)

5 sns.boxplot(

6 y=param, x='group',

7 data=mle_params_df, linewidth=3,

8 palette='Set3')

9 sns.stripplot(

10 y=param, x='group', data=mle_params_df,

11 jitter=0.1, linewidth=2, palette='Set3')

12 if i < 2:

13 plt.xlabel('')

14 plt.ylim([-0.05, 1.05]) # use the same scale for each parameter type

15 else:

16 plt.xlabel('Group', fontweight='bold')

17 plt.ylim([0, 12])

18

19 plt.ylabel('')

20 plt.title(param_names[param], fontweight='bold')

21

22plt.suptitle('Estimated parameters of agents (MLE)', fontsize=15, fontweight='bold')

23plt.tight_layout()

24plt.show()

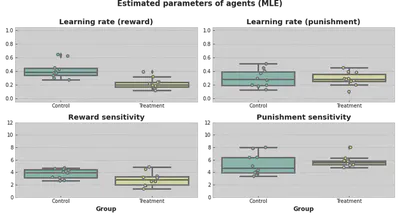

Now, we can actually estimate how good the selected parameters are.

Code

1# plot estimated parameters vs true parameters

2plt.figure(figsize=(8,8))

3

4for (i,param) in enumerate(params):

5

6 plt.subplot(2,2,i+1)

7 plt.axis('square')

8 sns.scatterplot(x=mle_params_df[param], y=true_params_df[param])

9

10 r_coef = pg.corr(true_params_df[param], mle_params_df[param])['r'][0]

11 p_val = pg.corr(true_params_df[param], mle_params_df[param])['p-val'][0]

12

13 if i < 2:

14 lim = [0,1]

15 plt.plot(lim, lim, ls="--", c=".3")

16 plt.xlim(lim)

17 plt.ylim(lim)

18 else:

19 lim = [0,10]

20 plt.plot(lim, lim, ls="--", c=".3")

21 plt.xlim(lim)

22 plt.ylim(lim)

23

24 plt.xlabel('MLE', fontweight='bold')

25 plt.ylabel('True', fontweight='bold')

26 plt.title(

27 f'{param_names[param]}\nr = {r_coef:.2f} (p-val = {p_val:.2f})',

28 fontweight='bold')

29

30plt.tight_layout()

31plt.show()

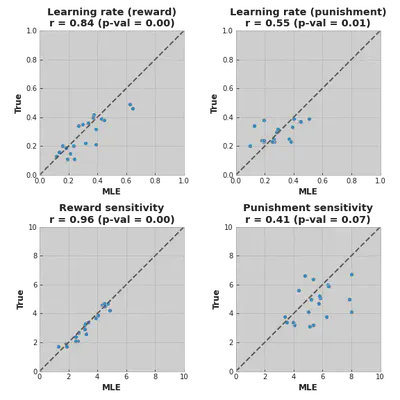

In the perfect world, all dots would lie on a diagonal line, meaning that fitted values (on the axis) match the real values (on the axis). In our case, we can see that MLE fit recovered values for reward sensitivity quite accurately, whereas punishment sensitivity values are not that aligned.

Another caution is that we have 2 estimated values for punishment sensitivity that are equal to or are really close to 8, which was the upper threshold for the optimizing function. This could mean that a better fitting value lies beyond the threshold value.

Model Comparison

By this point we have found the set of parameters for a model that fit data quite well, but what if our model is wrong? MLE will always return a set of best-fitting parameters, but if the chosen model doesn’t represent the actual mechanism of subjects’ decision making, the results are unreliable. One can deal with such an issue by trying out several models and comparing them.

What if our subjects don’t really have the “competing” sides of positive and negative feedback and the learning rate and sensitivity are the same for both types of an outcome? We will also fit model to data and call this model “No Competition”. Another possible scenario is that subjects don’t update the action values for non-selected actions. We will call this model “No update”. The model that we have fit before will be called “Full Model”.

Now, in order to check these models, we just have to change the log likelihood function that we want to optimize. Once we find the likelihoods of the model, we can compare the models. There are several ways of doing it, but the most common ones are with the help of Akaike information criterion (AIC) or Bayesian information criterion (BIC):

- - number of estimated parameters in the model

- - maximum value of the likelihood function for the model

- - the number of observations (number of trials in our case)

The first part for both equations (before the minus sign) represents the model complexity, whereas the second part (after the minus sign) represents the model performance. Basically, both criteria are looking for a better performing and less complex model. In practice, the lower the values, the better.

Model algorithm for “No Competition” model:

- for each step in episode do

- Select action using softmax policy

- Observe reward and punishment values. Outcome

- for each action in possible actions do

- if == do

- else

- end

- if == do

- end

- end

Model algorithm for “No Update” model:

- for each step in episode do

- Select action using softmax policy

- Observe reward and punishment values

- end

Code

1def log_lik_no_compet(x, *args):

2 """

3 Sum of negative log likelihoods over all trials for "No Competition" model.

4

5 Arguments

6 ----------

7 x : tuple, list

8 Parameters to estimate by MLE fit.

9

10 args: tuple, list

11 Input parameters for the model.

12

13 Returns

14 ----------

15 Sum of negative log likelihoods.

16 """

17

18 alpha, outcome_sens = x # parameters to estimate

19 n_arms, actions, gain, loss = args # input values

20

21 Qs = np.ones(shape=(n_arms,))

22 Qs /= n_arms

23

24 log_prob_actions = np.zeros(shape=(len(actions), n_arms))

25

26 for i, (a, g, l) in enumerate(zip(actions, gain, loss)):

27

28 r = g + l

29

30 a_left = list(range(n_arms)) # actions that were not selected

31 a_left.remove(a)

32

33 log_prob_action = log_softmax(Qs)

34 log_prob_actions[i] = log_prob_action[a]

35

36 Qs[a] += alpha * (outcome_sens*r - Qs[a])

37 for a_l in a_left:

38 Qs[a_l] += alpha*(-Qs[a_l])

39

40 return -np.sum(log_prob_actions)

41

42

43def log_lik_no_update(x, *args):

44 """

45 Sum of negative log likelihoods over all trials for "No Update" model.

46

47 Arguments

48 ----------

49 x : tuple, list

50 Parameters to estimate by MLE fit.

51

52 args: tuple, list

53 Input parameters for the model.

54

55 Returns

56 ----------

57 Sum of negative log likelihoods.

58 """

59

60 alpha_rew, alpha_pun, rew_sens, pun_sens = x # parameters to estimate

61 n_arms, actions, gain, loss = args # input values

62

63 Q_rew = np.ones(shape=(n_arms,))

64 Q_rew /= n_arms

65

66 Q_pun = np.ones(shape=(n_arms,))

67 Q_pun /= n_arms

68

69 log_prob_actions = np.zeros(shape=(len(actions), n_arms))

70

71 for i, (a, g, l) in enumerate(zip(actions, gain, loss)):

72

73 log_prob_action = log_softmax(Q_rew + Q_pun)

74 log_prob_actions[i] = log_prob_action[a]

75

76 Q_rew[a] += alpha_rew * (rew_sens*g - Q_rew[a])

77 Q_pun[a] += alpha_pun * (pun_sens*l - Q_pun[a])

78

79 return -np.sum(log_prob_actions)

80

81

82def aic(k, L):

83 """

84 Get the value of Akaike information criterion (AIC).

85

86 Arguments

87 ----------

88 k : int

89 Number of estimated parameters in the model

90

91 L : float

92 Logarithm of a maximum value of the likelihood function for the model

93

94 Returns

95 ----------

96 AIC value

97 """

98

99 return 2*k - 2*L

100

101def bic(k, n, L):

102 """

103 Get the value of Bayesian information criterion (BIC).

104

105 Arguments

106 ----------

107 k : int

108 Number of estimated parameters in the model.

109

110 n : int

111 The number of observations.

112

113 L : float

114 Logarithm of a maximum value of the likelihood function for the model

115

116 Returns

117 ----------

118 BIC value

119 """

120

121 return k*np.log(n) - 2*L

For both new models, we will also fit 10 different initial parameters and select the optimal ones before comparing models between each other.

Code

1all_mle_params_no_compet = []

2

3for i in range(10):

4 # generate random initial values

5 np.random.seed(i)

6 alpha0 = round(np.random.random(), 2)

7 sens0 = np.random.randint(low=0, high=80) / 10

8 x0 = [alpha0, sens0]

9

10 mle_output = mle_fit(

11 x0=x0, fun=log_lik_no_compet,

12 params=['alpha', 'outcome_sens'],

13 bounds=((0, 1), (0, 8)))

14

15 all_mle_params_no_compet.append(mle_output)

16

17selected_params_no_compet = best_params(all_mle_params_no_compet)

Fit with x0: [0.55, 6.4]: 100%|██████████| 20/20 [00:12<00:00, 1.61it/s]

Fit with x0: [0.42, 1.2]: 100%|██████████| 20/20 [00:16<00:00, 1.18it/s]

Fit with x0: [0.44, 7.2]: 100%|██████████| 20/20 [00:09<00:00, 2.08it/s]

Fit with x0: [0.55, 0.3]: 100%|██████████| 20/20 [00:20<00:00, 1.01s/it]

Fit with x0: [0.97, 5.5]: 100%|██████████| 20/20 [00:09<00:00, 2.16it/s]

Fit with x0: [0.22, 6.1]: 100%|██████████| 20/20 [00:12<00:00, 1.63it/s]

Fit with x0: [0.89, 7.9]: 100%|██████████| 20/20 [00:08<00:00, 2.29it/s]

Fit with x0: [0.08, 2.5]: 100%|██████████| 20/20 [00:14<00:00, 1.39it/s]

Fit with x0: [0.87, 0.5]: 100%|██████████| 20/20 [00:19<00:00, 1.01it/s]

Fit with x0: [0.01, 5.4]: 100%|██████████| 20/20 [00:24<00:00, 1.24s/it]

1all_mle_params_no_update = []

2

3for i in range(10):

4 # generate random initial values

5 np.random.seed(i*10)

6 alpha0 = list(np.random.random(size=(2,)).round(2))

7 sens0 = list(np.random.randint(low=0, high=80, size=(2,)) / 10)

8 x0 = alpha0 + sens0

9

10 mle_output = mle_fit(

11 x0=x0, fun=log_lik_no_update,

12 params=params,

13 bounds=((0, 1), (0, 1), (0, 8), (0, 8)))

14

15 all_mle_params_no_update.append(mle_output)

16

17selected_params_no_update = best_params(all_mle_params_no_update)

Fit with x0: [0.55, 0.72, 6.7, 6.7]: 100%|██████████| 20/20 [00:18<00:00, 1.10it/s]

Fit with x0: [0.77, 0.02, 6.4, 2.8]: 100%|██████████| 20/20 [00:15<00:00, 1.28it/s]

Fit with x0: [0.59, 0.9, 2.8, 0.9]: 100%|██████████| 20/20 [00:25<00:00, 1.27s/it]

Fit with x0: [0.64, 0.38, 1.2, 2.3]: 100%|██████████| 20/20 [00:35<00:00, 1.79s/it]

Fit with x0: [0.41, 0.06, 5.6, 5.0]: 100%|██████████| 20/20 [00:22<00:00, 1.10s/it]

Fit with x0: [0.49, 0.23, 3.3, 0.4]: 100%|██████████| 20/20 [00:32<00:00, 1.60s/it]

Fit with x0: [0.3, 0.19, 1.5, 7.2]: 100%|██████████| 20/20 [00:44<00:00, 2.21s/it]

Fit with x0: [0.93, 0.87, 6.0, 5.9]: 100%|██████████| 20/20 [00:17<00:00, 1.15it/s]

Fit with x0: [0.52, 0.7, 1.0, 5.0]: 100%|██████████| 20/20 [00:45<00:00, 2.26s/it]

Fit with x0: [0.15, 0.16, 6.7, 3.9]: 100%|██████████| 20/20 [00:46<00:00, 2.34s/it]

1# combine selected value for each model in one list

2all_models = [selected_params, selected_params_no_update, selected_params_no_compet]

3model_names = ['Full Model', 'No Update', 'No Competition']

4

5metrics = {}

6# dictionary will look like

7# {<subject>:

8# {<Model Name>:

9# {'AIC': <value>},

10# {'BIC': <value>}

11# }

12# }

13

14for subj in itertools.chain(*ids.values()):

15

16 metrics[subj] = {}

17

18 for (i,m) in enumerate(all_models):

19

20 metrics[subj][model_names[i]] = {}

21

22 metrics[subj][model_names[i]]['AIC'] = int(aic(

23 k=len(m[subj])-1,

24 L=-m[subj]['likelihood']))

25

26 metrics[subj][model_names[i]]['BIC'] = int(bic(

27 k=len(m[subj])-1,

28 n=n_trials,

29 L=-m[subj]['likelihood']))

1# convert dictionary to data frame for better visualization

2metrics_df = pd.DataFrame({})

3

4for (i, subj) in enumerate(itertools.chain(*ids.values())):

5 temp = pd.DataFrame(metrics[subj]).reset_index(drop=False)

6 temp.rename(columns={'index': 'metric'}, inplace=True)

7 temp['subjID'] = subj

8 metrics_df = metrics_df.append(temp)

9

10metrics_df.set_index(['subjID', 'metric']).style.highlight_min(

11 axis=1,

12 props='color:black; font-weight:bold; background-color:lightgreen;')

| Full Model | No Update | No Competition | ||

|---|---|---|---|---|

| subjID | metric | |||

| 1 | AIC | 1696 | 1807 | 1692 |

| BIC | 1709 | 1821 | 1699 | |

| 2 | AIC | 1257 | 1479 | 1281 |

| BIC | 1270 | 1492 | 1288 | |

| 3 | AIC | 1044 | 1262 | 1054 |

| BIC | 1057 | 1275 | 1060 | |

| 4 | AIC | 1802 | 1922 | 1806 |

| BIC | 1816 | 1935 | 1812 | |

| 5 | AIC | 863 | 1046 | 882 |

| BIC | 876 | 1059 | 889 | |

| 6 | AIC | 924 | 1058 | 930 |

| BIC | 937 | 1072 | 936 | |

| 7 | AIC | 1470 | 1733 | 1516 |

| BIC | 1483 | 1747 | 1523 | |

| 8 | AIC | 1097 | 1253 | 1098 |

| BIC | 1110 | 1266 | 1105 | |

| 9 | AIC | 1066 | 1472 | 1089 |

| BIC | 1079 | 1485 | 1095 | |

| 10 | AIC | 1710 | 1821 | 1749 |

| BIC | 1724 | 1834 | 1756 | |

| 11 | AIC | 1987 | 2147 | 2064 |

| BIC | 2000 | 2160 | 2070 | |

| 12 | AIC | 1805 | 1874 | 1857 |

| BIC | 1818 | 1887 | 1864 | |

| 13 | AIC | 1967 | 2100 | 1988 |

| BIC | 1980 | 2113 | 1994 | |

| 14 | AIC | 1455 | 1572 | 1489 |

| BIC | 1468 | 1585 | 1495 | |

| 15 | AIC | 998 | 1135 | 1042 |

| BIC | 1011 | 1148 | 1049 | |

| 16 | AIC | 1975 | 2050 | 2035 |

| BIC | 1988 | 2064 | 2042 | |

| 17 | AIC | 1272 | 1410 | 1307 |

| BIC | 1285 | 1424 | 1314 | |

| 18 | AIC | 1897 | 2039 | 1927 |

| BIC | 1911 | 2052 | 1933 | |

| 19 | AIC | 1889 | 1932 | 1894 |

| BIC | 1902 | 1946 | 1901 | |

| 20 | AIC | 1627 | 1724 | 1658 |

| BIC | 1640 | 1737 | 1664 |

As one can see from the results table, BIC and AIC values don’t always agree. And even though “No Competition” model showed lower BIC and AIC values for some of the subjects, we will select the “Full Model” as a winning one, since it fits better for the majority of subjects.

Comparison to the Random Choice Model

One can also check whether the subject performed actions at random with the help of “classical” statistical approaches, such as Chi-square test.

Our null hypothesis is that actions were selected at random. If this was true in our case, then for 200 trials, we would see 50 selections of each option. An alternative hypothesis is that number of selections of each option is different from 50. Significance level is going to be 0.05. We will perform the Chi-square test for each subject separately.

Code

1alpha = 0.05

2chisq_results = {}

3

4for subj in itertools.chain(*ids.values()):

5 # get the list of observed counts of choices

6 obs = agent_data[agent_data['subjID'] == subj]['choice']\

7 .value_counts()\

8 .sort_index()\

9 .to_list()

10 chisq_results[subj] = chisquare(f_obs=obs)[1]

11

12if np.sum(np.array(list(chisq_results.values())) < alpha) == n_subj*2:

13 print('Rejecting the null hypothesis for all subjects.')

14else:

15 print('Some subject may have chosen actions at random.')

Rejecting the null hypothesis for all subjects.

Results above show that we were able to reject the null hypothesis for all the subjects, meaning that the counts of choices are not equal (or in other words, choices were not selected at random) at the significance level of 0.05.

Model Validation

After we have selected the model, we could proceed to the model validation. In this step, we try to replicate the original data with the selected parameters and check whether they fit. We will use the same function generate_agent_data that we used to generate the initial set using the estimated values from MLE results and see how artificial data differs from the original one. As a measure of comparison, we can check the ratio of selection of 4-th window (the one with the highest reward probability and lowest punishment probability). This will allow us to indirectly compare the action value functions (if estimated parameters were close to the real parameters, the action value function over the trials would be approximately the same and thus the probability of choosing each option would also be roughly the same).

Code

1# data generation

2sim_data = pd.DataFrame()

3

4for subj in itertools.chain(*ids.values()):

5

6 actions, gain, loss, Qs = generate_agent_data(

7 alpha_rew=selected_params[subj]['alpha_rew'],

8 alpha_pun=selected_params[subj]['alpha_pun'],

9 rew_sens=selected_params[subj]['R'],

10 pun_sens=selected_params[subj]['P'],

11 rew_prob=rew_probs,

12 pun_prob=pun_probs,

13 rew_vals=rew_vals,

14 n_trials=n_trials)

15

16 temp_df = pd.DataFrame({

17 'subjID': subj, 'choice': actions+1, 'gain': gain, 'loss': loss})

18

19 sim_data = sim_data.append(temp_df)

1plt.figure(figsize=(10,20))

2for (j, subj) in enumerate(itertools.chain(*ids.values())):

3 cumavg_true = []

4 cumavg_sim = []

5

6 plt.subplot(10, 2, j+1)

7 for i in range(n_trials):

8 cumavg_true.append(np.mean(agent_data[agent_data['subjID'] == subj]['choice'][:i+1] == 4))

9 cumavg_sim.append(np.mean(sim_data[sim_data['subjID'] == subj]['choice'][:i+1] == 4))

10

11 plt.plot(cumavg_true, color='blue', label='True')

12 plt.plot(cumavg_sim, color='red', label='Simulated')

13 plt.legend()

14 plt.title(f'Subject {subj}', fontweight='bold')

15 plt.ylim([0,1])

16 if (j+2) % 2 == 0: # for odd indeces

17 plt.ylabel('$P(a_t = a_4)$')

18

19 if j in [18, 19]:

20 plt.xlabel('Trial', fontweight='bold')

21

22plt.suptitle('Probability of choosing the 4th arm', y = 1.01, fontsize=15, fontweight='bold')

23plt.tight_layout()

24plt.show()

Probabilities (of ratio) of selection of the 4th option don’t always perfectly match between true and simulated values, but it’s fairly close. We can make a conclusion, that model captured the real behavior pretty well.

Groups Comparison

Once we have selected the model and confirmed, that it can reproduce the real behavioral data, we can come to the main research question we had in the beginning: does treatment change the reward sensitivity and learning rate for a positive outcome?

We have our selected parameters for each subject, now it comes again to the statistical analysis. We will use a two-sample -test to see whether there is a significant difference between the samples of parameters at the significance level of 0.05.

Results

Code

1for param in params:

2 print(f"==Results for {param_names[param]}==")

3 ttest_res = pg.ttest(

4 x=mle_params_df[mle_params_df['group'] == 'Control'][param],

5 y=mle_params_df[mle_params_df['group'] == 'Treatment'][param])

6 display(ttest_res)

7 print()

Results for learning rate (reward):

| T | dof | alternative | p-val | CI95% | cohen-d | BF10 | power | |

|---|---|---|---|---|---|---|---|---|

| T-test | 4.188352 | 18 | two-sided | 0.000552 | [0.1, 0.3] | 1.873088 | 48.259 | 0.977033 |

Results for learning rate (punishment):

| T | dof | alternative | p-val | CI95% | cohen-d | BF10 | power | |

|---|---|---|---|---|---|---|---|---|

| T-test | 0.169418 | 18 | two-sided | 0.867357 | [-0.1, 0.12] | 0.075766 | 0.401 | 0.052959 |

Results for reward sensitivity:

| T | dof | alternative | p-val | CI95% | cohen-d | BF10 | power | |

|---|---|---|---|---|---|---|---|---|

| T-test | 1.981033 | 18 | two-sided | 0.063076 | [-0.05, 1.79] | 0.885945 | 1.463 | 0.466243 |

Results for punishment sensitivity:

| T | dof | alternative | p-val | CI95% | cohen-d | BF10 | power | |

|---|---|---|---|---|---|---|---|---|

| T-test | -0.751089 | 18 | two-sided | 0.462306 | [-1.77, 0.84] | 0.335897 | 0.485 | 0.109741 |

-test results showed that there is only a significant difference between the learning rate for a reward with the large effect size. One could argue that difference for reward sensitivity is also statistically different with the medium effect size.

Another general issue with this approach is that each estimated value of the parameter has its own value of uncertainty (which can be estimated using the inverse of Hessian matrix), but by doing the -test (or another alternative test) we are losing this uncertainty and use the values as if they were fixed. Bayesian approach doesn’t have this issue and we can see it in the 2nd part of the article.

Summary and Limitations

We have looked at how one can fit RL model to the behavioral data using MLE approach and select the best fitting values. Then we have introduced the idea of models comparison and model validation. Lastly, we have used statistical analysis to answer the research question using selected model parameters.

However, there are several limitations of this review. First of all, data was artificially generated and parameters were selected for simplicity from a given range. Real behavioral data can be much noisier.

Secondly, it is worth mentioning, that the initial data was generated with the research question in mind (cheating) to make it more exciting, that’s why the results came up significantly different. Although it doesn’t change the modeling and analysis steps.

►Computational Models of Behavior, part 2: Bayesian Inference

References

Schultz W. (1998). Predictive reward signal of dopamine neurons. Journal of neurophysiology, 80(1), 1–27. https://doi.org/10.1152/jn.1998.80.1.1 ↩︎

Lockwood, P. L., & Klein-Flügge, M. C. (2021). Computational modelling of social cognition and behaviour-a reinforcement learning primer. Social cognitive and affective neuroscience, 16(8), 761–771. https://doi.org/10.1093/scan/nsaa040 ↩︎

Daw, N. (2011). Trial-by-trial data analysis using computational models. Affect, Learning and Decision Making, Attention and Performance XXIII. 23. https://doi.org/10.1093/acprof:oso/9780199600434.003.0001 ↩︎ ↩︎

Wilson, R. C., & Collins, A. G. (2019). Ten simple rules for the computational modeling of behavioral data. eLife, 8, e49547. https://doi.org/10.7554/eLife.49547 ↩︎

Seymour, B., Daw, N. D., Roiser, J. P., Dayan, P., & Dolan, R. (2012). Serotonin selectively modulates reward value in human decision-making. The Journal of neuroscience : the official journal of the Society for Neuroscience, 32(17), 5833–5842. https://doi.org/10.1523/JNEUROSCI.0053-12.2012 ↩︎